Flutter audio plugin using SoLoud library and FFI

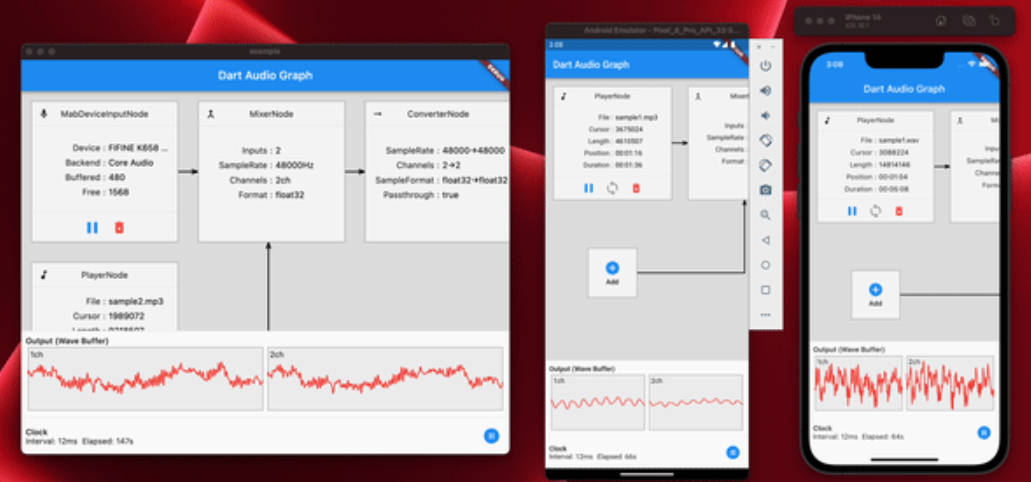

| Linux | Windows | Android | MacOS | iOS | web |
|---|---|---|---|---|---|
| 💙 | 💙 | 💙 | 💙 | 💙 | 😭 |

- Supported on Linux, Windows, Mac, Android, and iOS

- Multiple voices, capable of playing different sounds simultaneously or even repeating the same sound multiple times on top of each other

- Includes a speech synthesizer

- Supports various common formats such as 8, 16, and 32-bit WAVs, floating point WAVs, OGG, MP3, and FLAC

- Enables real-time retrieval of audio FFT and wave data

Overview

The flutter_soloud plugin uses a fork of SoLoud in which the miniaudio audio backend has been updated. The fork is included in src/soloud as a git submodule.

Because of the submodule, you must clone this repository with the --recursive option so that the dependencies are fetched too:

git clone --recursive https://github.com/alnitak/flutter_soloud.git

If you have already cloned the repository without the recursive option, you can navigate to the repository directory and execute the following command to update the git submodule:

git submodule update --init --recursive

For information regarding the SoLoud license, please refer to this link.

There are 3 examples:

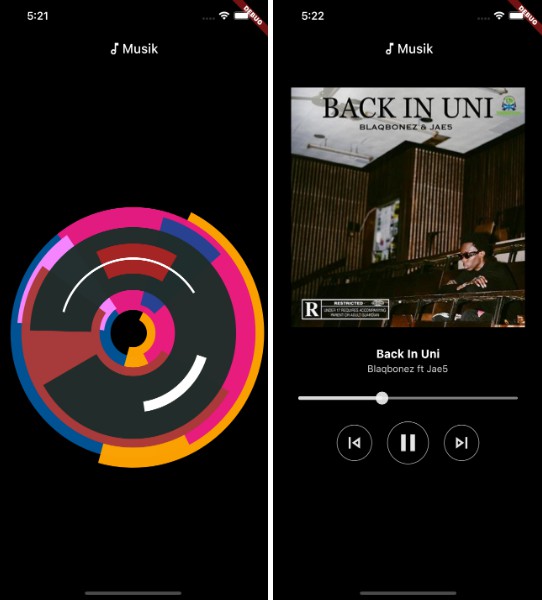

The 1st is a simple use-case.

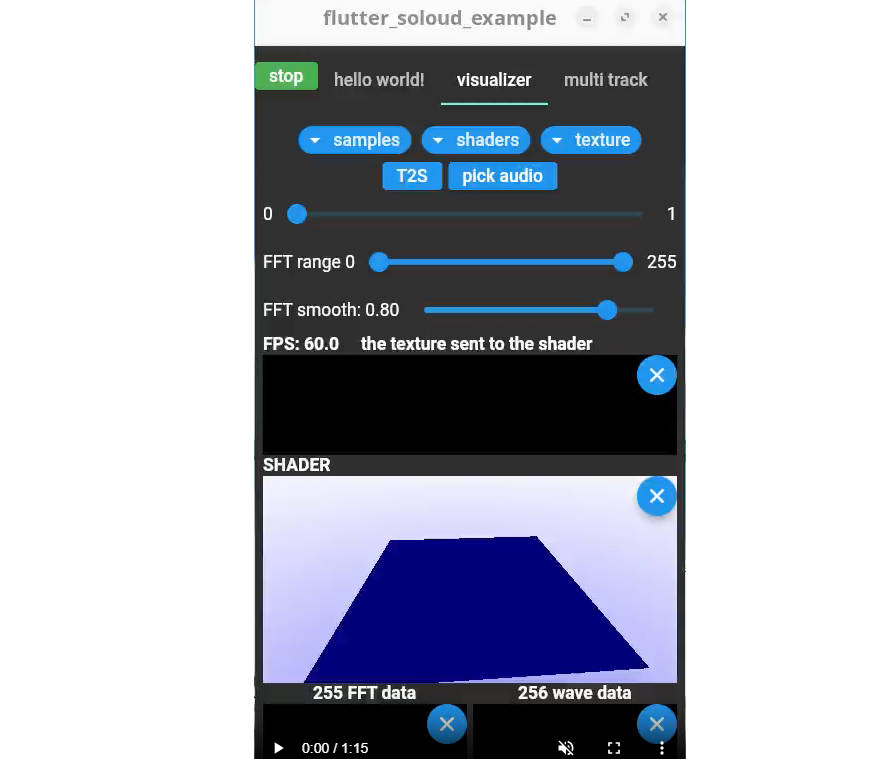

The 2nd aims to show a visualization of frequencies and wave data.

The file [Visualizer.dart] uses getAudioTexture2D to store new audio data into audioData on every tick.

The video below illustrates how the data is then converted to an image (the upper widget) and sent to the shader (the middle widget).

The bottom widgets display FFT data on the left and wave data on the right, drawn as rows of yellow vertical containers whose heights are taken from audioData.

getAudioTexture2D returns an array 512 floats wide and 256 rows high. Each row contains 256 floats of FFT data and 256 floats of wave data, which makes it possible to write a shader such as a spectrogram (shader #8) or a 3D visualization (shader #9).

Shaders 1 to 7 use just one row of audioData. Therefore, the texture generated to feed the shader should be 256×2 px: the 1st row holds the FFT data and the 2nd holds the wave data.
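The row layout described above can be sketched in plain Dart. This is only an illustration, not the plugin's code: `AudioHistory`, `push`, `fftOf` and `waveOf` are hypothetical names, and the sketch assumes that the FFT half comes first in each row and that the newest row is always stored at index 0 while older rows move one position away.

```dart
import 'dart:typed_data';

/// Number of FFT values (and of wave values) in one row, as stated above.
const int binsPerHalf = 256;

/// Hypothetical rolling history of audio rows, newest row first.
class AudioHistory {
  AudioHistory({this.rowCount = 256})
      : rows = List.generate(rowCount, (_) => Float32List(binsPerHalf * 2));

  final int rowCount;
  final List<Float32List> rows;

  /// Stores [row] (assumed: 256 FFT floats, then 256 wave floats) at index 0.
  /// Older rows move one position further away and the oldest one is dropped.
  void push(Float32List row) {
    if (row.length != binsPerHalf * 2) {
      throw ArgumentError('expected ${binsPerHalf * 2} floats per row');
    }
    rows.insert(0, Float32List.fromList(row));
    rows.removeLast();
  }

  /// View on the FFT half of the row at [rowIndex].
  Float32List fftOf(int rowIndex) =>
      Float32List.sublistView(rows[rowIndex], 0, binsPerHalf);

  /// View on the wave half of the row at [rowIndex].
  Float32List waveOf(int rowIndex) =>
      Float32List.sublistView(rows[rowIndex], binsPerHalf);
}
```

A shader that needs only the latest data (like shaders 1 to 7) would read `fftOf(0)` and `waveOf(0)`, while a spectrogram would walk through all rows.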

Since many operations are required for each frame, the CPU and GPU can be under stress, leading to overheating of a mobile device.

It seems that sending an image (with setImageSampler()) to the shader is very expensive. You can observe this by disabling the shader widget.

soloud6.mp4

The 3rd example demonstrates how to manage sounds using their handles. Every sound must be loaded before it can be played. Loading can take some time, so it should not be done at a time-critical moment, for instance during gameplay in a game. Once a sound is loaded, it can be played, and every playing instance of that same sound is identified by its own handle.

The example shows how you can have background music and play a fire sound multiple times.

soloud6-B.mp4

Usage

First of all, AudioIsolate must be initialized:

    Future<bool> start() async {
      // Wait for the isolate to report its status before checking it.
      final value = await AudioIsolate().startIsolate();
      if (value == PlayerErrors.noError) {
        debugPrint('isolate started');
        return true;
      } else {
        debugPrint('isolate starting error: $value');
        return false;
      }
    }

Once the isolate has started successfully, a sound can be loaded:

    Future<SoundProps?> loadSound(String completeFileName) async {
      final load = await AudioIsolate().loadFile(completeFileName);
      // Return null when the file could not be loaded.
      if (load.error != PlayerErrors.noError) return null;
      return load.sound;
    }

The [SoundProps] returned:

    class SoundProps {
      SoundProps(this.soundHash);

      // the [hash] returned by [loadFile]
      final int soundHash;

      /// handles of this sound. Multiple instances of this sound can be
      /// played, each with their unique handle
      List<int> handle = [];

      /// the user can listen, e.g., for when a sound ends or for key events (TODO)
      StreamController<StreamSoundEvent> soundEvents = StreamController.broadcast();
    }

soundHash and handle list are then used in the AudioIsolate() class.
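To make the relationship between one loaded sound and its handles concrete, here is a toy model in plain Dart. It is not the plugin's implementation and plays no audio: `ToySound` and `ToyPlayer` are hypothetical stand-ins that only mimic the bookkeeping, under the assumption that every play adds one handle and that stopping removes it.

```dart
/// Toy stand-in for SoundProps: one loaded sound, many playing instances.
class ToySound {
  ToySound(this.soundHash);

  /// Identifies the loaded sound.
  final int soundHash;

  /// One entry per instance that is currently playing.
  final List<int> handles = [];
}

/// Hypothetical player that only tracks handles (no audio involved).
class ToyPlayer {
  int _nextHandle = 1;

  /// Every call starts a new instance and returns its unique handle.
  int play(ToySound sound) {
    final handle = _nextHandle++;
    sound.handles.add(handle);
    return handle;
  }

  /// Stops a single instance; returns false if the handle is unknown.
  bool stop(ToySound sound, int handle) => sound.handles.remove(handle);

  /// Stops all instances of the given sound.
  void stopSound(ToySound sound) => sound.handles.clear();
}
```

With this model, playing a fire sound twice yields two different handles for the same `soundHash`; stopping one handle leaves the other instance running, whereas `stopSound` ends both.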

The AudioIsolate instance

The AudioIsolate instance has the duty of receiving commands and sending them to a separate Isolate, while returning the results to the main UI isolate.

| Function | Returns | Params | Description |
|---|---|---|---|
| startIsolate | PlayerErrors | – | Start the audio isolate and listen for messages coming from it. |
| stopIsolate | bool | – | Stop the loop, stop the engine, and kill the isolate. Must be called when there is no more need for the player or when closing the app. |
| isIsolateRunning | bool | – | Return true if the audio isolate is running. |
| initEngine | PlayerErrors | – | Initialize the audio engine. Defaults are: Sample rate 44100, buffer 2048, and Miniaudio audio backend. |
| dispose | – | – | Stop the audio engine. |
| loadFile | ({PlayerErrors error, SoundProps? sound}) | String fileName | Load a new sound to be played once or multiple times later. |
| play | ({PlayerErrors error, SoundProps sound, int newHandle}) | SoundProps sound, {double volume = 1, double pan = 0, bool paused = false} | Play an already loaded sound identified by [sound]. |
| speechText | ({PlayerErrors error, SoundProps sound}) | String textToSpeech | Synthesize speech from the given text. |
| pauseSwitch | PlayerErrors | int handle | Pause or unpause an already loaded sound identified by [handle]. |
| getPause | ({PlayerErrors error, bool pause}) | int handle | Get the pause state of the sound identified by [handle]. |
| stop | PlayerErrors | int handle | Stop an already loaded sound identified by [handle] and clear it. |
| stopSound | PlayerErrors | int handle | Stop ALL handles of the already loaded sound identified by [soundHash] and clear it. |
| getLength | ({PlayerErrors error, double length}) | int soundHash | Get the sound length in seconds. |
| seek | PlayerErrors | int handle, double time | Seek to the given playing position, in seconds. |
| getPosition | ({PlayerErrors error, double position}) | int handle | Get the current sound position in seconds. |
| getIsValidVoiceHandle | ({PlayerErrors error, bool isValid}) | int handle | Check if a handle is still valid. |
| setVisualizationEnabled | – | bool enabled | Enable or disable getting data from getFft, getWave, getAudioTexture*. |
| getFft | – | Pointer<Float> fft | Returns a 256 float array containing FFT data. |
| getWave | – | Pointer<Float> wave | Returns a 256 float array containing wave data (magnitudes). |
| getAudioTexture | – | Pointer<Float> samples | Returns in samples a 512 float array. The first 256 floats represent the FFT frequencies data [0.0 |
| getAudioTexture2D | – | Pointer<Pointer<Float>> samples | Return a floats matrix of 256×512. Every row is composed of 256 FFT values plus 256 wave data. Every time it is called, a new row is stored in the first row and all the previous rows are shifted up (the last will be lost). |
| setFftSmoothing | – | double smooth | Smooth FFT data. When new data is read and the values are decreasing, the new value will be decreased with an amplitude between the old and the new value. This will result in a less shaky visualization. 0 = no smoothing, 1 = full smoothing. The new value is calculated with: newFreq = smooth * oldFreq + (1 - smooth) * newFreq |
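The smoothing rule given for setFftSmoothing can be written out as a small plain-Dart sketch. This is an illustration of the formula only, not the native code: `smoothBin` and `smoothFrame` are hypothetical names, and the sketch assumes that rising values pass through unchanged, since the description only mentions smoothing for decreasing values.

```dart
/// Applies the smoothing rule to a single frequency bin.
/// [smooth] is expected in the range 0..1 (0 = no smoothing, 1 = full).
double smoothBin(double oldFreq, double newFreq, double smooth) {
  // Assumption: only decreasing values are smoothed.
  if (newFreq >= oldFreq) return newFreq;
  // Formula from the table: a blend between the old and the new value.
  return smooth * oldFreq + (1 - smooth) * newFreq;
}

/// Applies [smoothBin] to every bin of a frame.
List<double> smoothFrame(
  List<double> previous,
  List<double> current,
  double smooth,
) {
  if (previous.length != current.length) {
    throw ArgumentError('frames must have the same length');
  }
  return [
    for (var i = 0; i < current.length; i++)
      smoothBin(previous[i], current[i], smooth),
  ];
}
```

For example, a bin that drops from 1.0 to 0.0 with `smooth = 0.5` is reported as 0.5 on that frame, so bars fall gradually instead of snapping to the new value.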

The PlayerErrors enum:

| name | description |
|---|---|
| noError | No error |
| invalidParameter | Some parameter is invalid |
| fileNotFound | File not found |
| fileLoadFailed | File found, but could not be loaded |
| dllNotFound | DLL not found, or wrong DLL |
| outOfMemory | Out of memory |
| notImplemented | Feature not implemented |
| unknownError | Other error |
| backendNotInited | Player not initialized |
| nullPointer | Null pointer. Can happen when passing a pointer that has not been initialized (with calloc()) to retrieve FFT or wave data |
| soundHashNotFound | The sound with the specified hash was not found |
| isolateAlreadyStarted | Audio isolate already started |
| isolateNotStarted | Audio isolate not yet started |
| engineNotStarted | Engine not yet started |

Each SoundProps has a StreamController (soundEvents) which, for now, can only be used to know when a sound handle has reached the end:

    import 'dart:async';

    StreamSubscription<StreamSoundEvent>? _subscription;

    void listenToEndPlaying(SoundProps sound) {
      _subscription = sound.soundEvents.stream.listen(
        (event) {
          /// Here the [event.handle] of [sound] has naturally finished
          /// and [sound.handle] doesn't contain [event.handle] anymore.
          /// This callback is not reached when calling [AudioIsolate().stop()]
          /// or [AudioIsolate().stopSound()]
        },
      );
    }

AudioIsolate() also has a StreamController (audioEvent) to monitor when the audio isolate starts or stops:

    AudioIsolate().audioEvent.stream.listen(
      (event) {
        /// event == AudioEvent.isolateStarted
        /// or
        /// event == AudioEvent.isolateStopped
      },
    );

Contribute

To use native code, bindings from Dart to C/C++ are needed. To avoid writing these manually, they are generated from the header file (src/ffi_gen_tmp.h) using package:ffigen and temporarily stored in lib/flutter_soloud_bindings_ffi_TMP.dart. You can generate the bindings by running dart run ffigen.

Since I needed to modify the generated .dart file, I followed this flow:

- Copy the function declarations to be generated into src/ffi_gen_tmp.h.

- The file lib/flutter_soloud_bindings_ffi_TMP.dart will be generated automatically.

- Copy the relevant code for the new functions from lib/flutter_soloud_bindings_ffi_TMP.dart into lib/flutter_soloud_bindings_ffi.dart.

Additionally, I have forked the SoLoud repository and made modifications to include the latest Miniaudio audio backend. This backend is in the [new_miniaudio] branch of my fork and is set as the default.

Project structure

This plugin uses the following structure:

- lib: Contains the Dart code that defines the API of the plugin relative to all platforms.

- src: Contains the native source code. Linux, Android and Windows have their own CMakeLists.txt file in their own subdir to build the code into a dynamic library.

- src/soloud: Contains the SoLoud sources of my fork.

Debugging

I have provided the necessary settings in the .vscode directory for debugging native C++ code on both Linux and Windows. To debug on Android, please use Android Studio and open the project located in the example/android directory. However, I am not familiar with the process of debugging native code on Mac and iOS.

Linux

If you encounter any glitches, they might be caused by PulseAudio. To troubleshoot this issue, you can try disabling PulseAudio within the linux/src.cmake file. Look for the line add_definitions(-DMA_NO_PULSEAUDIO) and make sure it is uncommented (this is now the default).

Android

The default audio backend is miniaudio, which will automatically select the appropriate audio backend based on your Android version:

- AAudio with Android 8.0 and newer.

- OpenSL|ES for older Android versions.

Windows

For Windows users, SoLoud utilizes Openmpt through a DLL, which can be obtained from https://lib.openmpt.org/. If you wish to use this feature, install the DLL and enable it by modifying the first line in windows/src.cmake.

Openmpt functions as a module-playing engine, capable of replaying a wide variety of multichannel music formats (669, amf, ams, dbm, digi, dmf, dsm, far, gdm, ice, imf, it, itp, j2b, m15, mdl, med, mid, mo3, mod, mptm, mt2, mtm, okt, plm, psm, ptm, s3m, stm, ult, umx, wow, xm). Additionally, it can load wav files and may offer better support for wav files compared to the stand-alone wav audio source.

iOS

On the simulator, the Impeller engine doesn’t work (as of 20 July 2023). To disable it, run the following command:

flutter run --no-enable-impeller

Unfortunately, I don’t have a real device to test it.

Web

I put in a lot of effort to make this work on the web! 🙁

I have successfully compiled the sources with Emscripten. Inside the web directory, there’s a script to automate the compiling process using the CMakeLists.txt file. This will generate libflutter_soloud_web_plugin.wasm and libflutter_soloud_web_plugin.bc.

Initially, I tried using the wasm_interop plugin, but encountered errors while loading and initializing the Module.

Then, I attempted using web_ffi, but it seems to have been discontinued because it only supports the old dart:ffi API 2.12.0, which cannot be used here.

TODOs

Many things can still be done.

The FFT data doesn’t match my expectations. Some work still needs to be done on Analyzer::calcFFT() in src/analyzer.cpp.

Images: flutter_soloud spectrum vs. Audacity spectrum.

For now, only a small portion of the possibilities offered by SoLoud has been implemented. Look here.

- audio filter effects

- 3D audio

- TED and SID soundchip simulator (Commodore 64/plus)

- noise and waveform generation and much more I think!