Audio Waveforms

Use this plugin to generate waveforms while recording audio, in any file format supported by the chosen encoder, or from existing audio files. You can use gestures to scroll through the waveforms or to seek to any position during playback, and you can also style the waveforms.

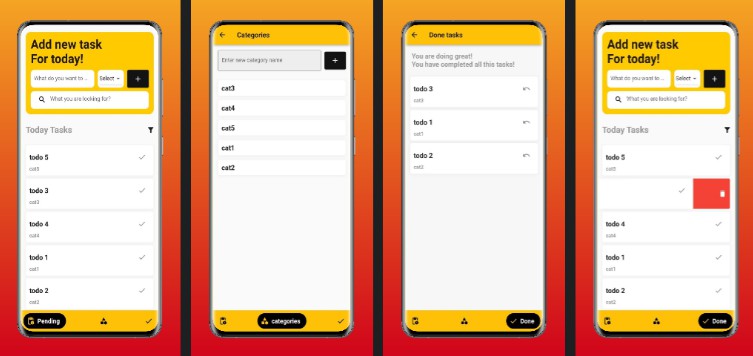

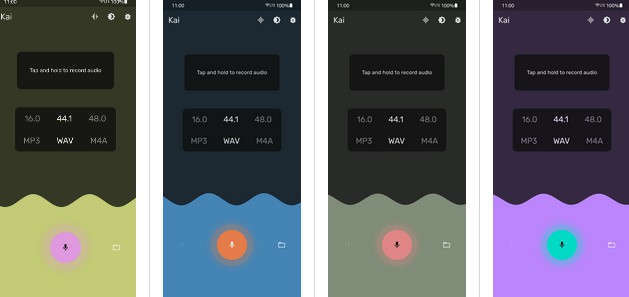

Preview

Recorder

Platform-specific configuration

Android

Change the minimum Android SDK version to 21 (or higher) in your android/app/build.gradle file:

```groovy
defaultConfig {
    minSdkVersion 21
}
```

Add the RECORD_AUDIO permission to android/app/src/main/AndroidManifest.xml, inside the `<manifest>` element:

```xml
<uses-permission android:name="android.permission.RECORD_AUDIO" />
```

iOS

Add these two lines to ios/Runner/Info.plist:

```xml
<key>NSMicrophoneUsageDescription</key>
<string>This app requires microphone permission.</string>
```

This plugin requires iOS 10.0 or higher, so add this line to ios/Podfile:

```ruby
platform :ios, '10.0'
```

Installing

- Add the dependency to pubspec.yaml:

```yaml
dependencies:
  audio_waveforms: <latest-version>
```

Get the latest version from the ‘Installing’ tab on pub.dev.

- Import the package.

```dart
import 'package:audio_waveforms/audio_waveforms.dart';
```

Usage

- Initialise RecorderController

```dart
late final RecorderController recorderController;

@override
void initState() {
  super.initState();
  recorderController = RecorderController();
}
```

- Use the AudioWaveforms widget in the widget tree

```dart
AudioWaveforms(
  size: Size(MediaQuery.of(context).size.width, 200.0),
  recorderController: recorderController,
),
```

- Start recording (this also starts drawing the waveform)

```dart
await recorderController.record();
```

You can pass a full path, including the file name and extension, in the path parameter of the record function. If no path is provided, .aac is used as the default extension and the current date and time are used as the file name.
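If you prefer to choose the path yourself, the hypothetical helper below builds a `<directory>/<timestamp>.<extension>` path that mirrors the default described above. The helper is not part of audio_waveforms. Its timestamp format is an assumption, so the plugin's own default file name may be formatted differently.

```dart
/// Hypothetical helper (not part of audio_waveforms): builds a full file
/// path such as "/dir/2024-01-02_03-04-05.aac" for the record path parameter.
String buildRecordingPath(String directory, DateTime time,
    {String extension = 'aac'}) {
  // Zero-pad each date-time part so file names sort chronologically.
  String two(int n) => n.toString().padLeft(2, '0');
  final stamp = '${time.year}-${two(time.month)}-${two(time.day)}_'
      '${two(time.hour)}-${two(time.minute)}-${two(time.second)}';
  // Avoid a double slash when the directory already ends with '/'.
  final dir = directory.endsWith('/')
      ? directory.substring(0, directory.length - 1)
      : directory;
  return '$dir/$stamp.$extension';
}
```

For example, `buildRecordingPath('/tmp', DateTime(2024, 1, 2, 3, 4, 5))` returns `/tmp/2024-01-02_03-04-05.aac`. Make sure the extension you pass matches the encoder and output format you configure.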

- Pause recording

```dart
await recorderController.pause();
```

- Stop recording

```dart
final path = await recorderController.stop();
```

This saves the recording at the provided path and returns the path to that file.

- Disposing RecorderController

```dart
@override
void dispose() {
  recorderController.dispose();
  super.dispose();
}
```

Additional features

- Scroll through waveform

```dart
AudioWaveforms(
  enableGesture: true,
),
```

With gestures enabled, you can scroll through the waveform while recording or while paused.

- Refreshing the waveform to its initial position after scrolling

```dart
recorderController.refresh();
```

Once the waveform has been scrolled, it stops following newly added waves during recording. Call refresh() to make it follow the new waves again. It can also be used in the paused or stopped state.
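The follow-and-refresh behaviour can be pictured with the small model below. The view tracks the newest wave until the user scrolls, and refresh re-enables tracking. This is a hypothetical sketch of the idea only, not the plugin's implementation. The class `LiveWaveView` and all of its members are invented for illustration.

```dart
/// Hypothetical model of a live waveform view (not audio_waveforms code).
class LiveWaveView {
  int waveCount = 0; // number of waves recorded so far
  int firstVisible = 0; // index of the leftmost visible wave
  bool following = true; // whether the view tracks the newest wave
  final int visibleWaves; // how many waves fit on screen

  LiveWaveView(this.visibleWaves);

  /// A new wave arrives from the recorder.
  void addWave() {
    waveCount++;
    if (following) _snapToEnd();
  }

  /// The user drags the waveform, so the view stops following new waves.
  void scrollTo(int index) {
    following = false;
    firstVisible = index.clamp(0, waveCount).toInt();
  }

  /// Plays the role of recorderController.refresh() in this model.
  void refresh() {
    following = true;
    _snapToEnd();
  }

  // Show the newest waves at the right edge.
  void _snapToEnd() {
    firstVisible = waveCount > visibleWaves ? waveCount - visibleWaves : 0;
  }
}
```

In this model, waves added after `scrollTo` leave `firstVisible` unchanged until `refresh()` snaps the view back to the newest wave.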

- Changing the style of the waves

```dart
AudioWaveforms(
  waveStyle: WaveStyle(
    color: Colors.white,
    showDurationLabel: true,
    spacing: 8.0,
    showBottom: false,
    extendWaveform: true,
    showMiddleLine: false,
  ),
),
```

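- Applying a gradient to the waves

```dart
// At the top of the file: ui.Gradient comes from dart:ui.
import 'dart:ui' as ui;

// In build():
AudioWaveforms(
  waveStyle: WaveStyle(
    gradient: ui.Gradient.linear(
      const Offset(70, 50),
      Offset(MediaQuery.of(context).size.width / 2, 0),
      [Colors.red, Colors.green],
    ),
  ),
),
```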

- Show duration of the waveform

```dart
AudioWaveforms(
  waveStyle: WaveStyle(showDurationLabel: true),
),
```

- Changing the wave update frequency and normalising the waves according to your needs and platform

```dart
// At the top of the file: Platform comes from dart:io.
import 'dart:io';

// In your State class:
late final RecorderController recorderController;

@override
void initState() {
  super.initState();
  recorderController = RecorderController()
    ..updateFrequency = const Duration(milliseconds: 100)
    ..normalizationFactor = Platform.isAndroid ? 60 : 40;
}
```
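As a rough rule of thumb, you can estimate how much recording time fits on screen. The estimate assumes each update adds one wave and waves are drawn about `spacing` logical pixels apart. Both are approximations, and the widget's real layout may add padding. The helper `visibleDuration` is hypothetical and not part of audio_waveforms.

```dart
/// Hypothetical estimate (not part of audio_waveforms): how much recording
/// time fits across a waveform [width] logical pixels wide, assuming one
/// wave per [updateFrequency] tick and [spacing] pixels between waves.
Duration visibleDuration({
  required double width,
  required double spacing,
  required Duration updateFrequency,
}) {
  if (width <= 0 || spacing <= 0) return Duration.zero;
  // Number of whole waves that fit on screen.
  final waves = (width / spacing).floor();
  return updateFrequency * waves;
}
```

With a 400-pixel-wide widget, `spacing: 8.0` and a 100 ms update frequency, about 50 waves (5 seconds) are visible at once. A shorter update frequency gives a denser waveform that scrolls faster.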

- Using different encoders and sample rates

```dart
late final RecorderController recorderController;

@override
void initState() {
  super.initState();
  recorderController = RecorderController()
    ..androidEncoder = AndroidEncoder.aac
    ..androidOutputFormat = AndroidOutputFormat.mpeg4
    ..iosEncoder = IosEncoder.kAudioFormatMPEG4AAC
    ..sampleRate = 16000;
}
```

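- Listening to the scrolled duration position

```dart
recorderController.currentScrolledDuration.addListener(() {
  // currentScrolledDuration is a ValueNotifier; read its current value here.
  final scrolledMs = recorderController.currentScrolledDuration.value;
});
```

For this to work, the shouldCalculateScrolledPosition flag needs to be enabled. The duration is in milliseconds.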
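Because the scrolled position is reported in milliseconds, you will usually want to format it before showing it. Here is a minimal sketch. The helper `formatMilliseconds` is hypothetical and not part of audio_waveforms.

```dart
/// Hypothetical helper (not part of audio_waveforms): formats a duration
/// given in milliseconds as "mm:ss", or "h:mm:ss" from one hour upwards.
String formatMilliseconds(int ms) {
  // Treat negative input as zero.
  final d = Duration(milliseconds: ms < 0 ? 0 : ms);
  String two(int n) => n.toString().padLeft(2, '0');
  final minutes = two(d.inMinutes.remainder(60));
  final seconds = two(d.inSeconds.remainder(60));
  return d.inHours > 0 ? '${d.inHours}:$minutes:$seconds' : '$minutes:$seconds';
}
```

Inside the listener you could call `formatMilliseconds(recorderController.currentScrolledDuration.value)`. For example, 65000 ms becomes `01:05`.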

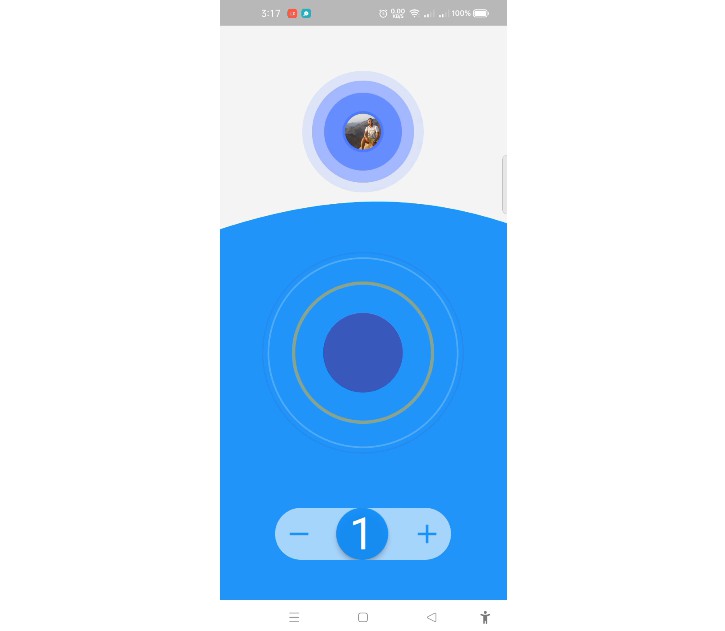

Player

Usage

- Initialise PlayerController

```dart
late PlayerController playerController;

@override
void initState() {
  super.initState();
  playerController = PlayerController();
}
```

- Prepare player

```dart
await playerController.preparePlayer(path);
```

Pass the path of the audio file as the parameter. You can also set the volume with an optional parameter.

- Use the AudioFileWaveforms widget in the widget tree

```dart
AudioFileWaveforms(
  size: Size(MediaQuery.of(context).size.width, 100.0),
  playerController: playerController,
)
```

- Start player

```dart
await playerController.startPlayer();
```

By default, when the audio ends, the player seeks back to the start. Pass false to startPlayer to keep it at the end instead.

- Pause player

```dart
await playerController.pausePlayer();
```

- Stop player

```dart
await playerController.stopPlayer();
```

- Disposing the playerController

```dart
@override
void dispose() {
  playerController.dispose();
  super.dispose();
}
```

Additional features

- Set volume for the player

```dart
await playerController.setVolume(1.0);
```

- Seek to any position

```dart
// Position in milliseconds (5000 ms = 5 seconds).
await playerController.seekTo(5000);
```

- Get the current or maximum duration of the audio file

```dart
// Pass DurationType.current instead to get the current position.
final duration = await playerController.getDuration(DurationType.max);
```
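If you build your own seek control (a slider, for example), you need to turn a relative position into a seekTo argument. The sketch below assumes seekTo expects milliseconds, like the durations elsewhere in this README. It also assumes the maximum duration comes from `getDuration(DurationType.max)`. The helper `seekTargetMs` is hypothetical and not part of audio_waveforms.

```dart
/// Hypothetical helper (not part of audio_waveforms): converts a relative
/// position (0.0 = start, 1.0 = end) into a millisecond offset for seekTo.
int seekTargetMs(double fraction, int maxDurationMs) {
  if (maxDurationMs <= 0) return 0;
  // Clamp so out-of-range input never seeks past either end of the file.
  final f = fraction.clamp(0.0, 1.0).toDouble();
  return (f * maxDurationMs).round();
}
```

For example, `await playerController.seekTo(seekTargetMs(0.5, duration));` jumps to the middle of the file.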

- Seek using gestures

```dart
AudioFileWaveforms(
  enableSeekGesture: true,
)
```

Audio can also be seeked using gestures on the waveform (enabled by default).

- Ending audio with different modes

```dart
await playerController.startPlayer(finishMode: FinishMode.stop);
```

FinishMode.stop stops the player, FinishMode.pause pauses it, and FinishMode.loop loops it.

- Listening to player state changes

```dart
playerController.onPlayerStateChanged.listen((state) {});
```

- Listening to current duration

```dart
playerController.onCurrentDurationChanged.listen((duration) {});
```

The duration is in milliseconds.
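To drive a custom progress indicator from these events, you can combine the current duration with the maximum duration from getDuration. Here is a minimal sketch. The helper `playbackProgress` is hypothetical and not part of audio_waveforms.

```dart
/// Hypothetical helper (not part of audio_waveforms): playback progress in
/// the range 0.0 to 1.0 from the current and max durations in milliseconds.
double playbackProgress(int currentMs, int maxMs) {
  // Guard against an unprepared player reporting a zero max duration.
  if (maxMs <= 0) return 0.0;
  return (currentMs / maxMs).clamp(0.0, 1.0).toDouble();
}
```

For example, inside `onCurrentDurationChanged.listen((duration) { ... })` you could compute `playbackProgress(duration, maxDuration)`, with `maxDuration` fetched once after `preparePlayer`.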