Video Call Flutter App

Description:

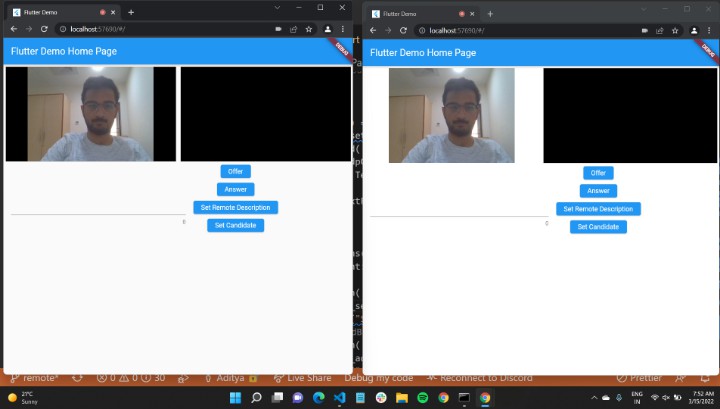

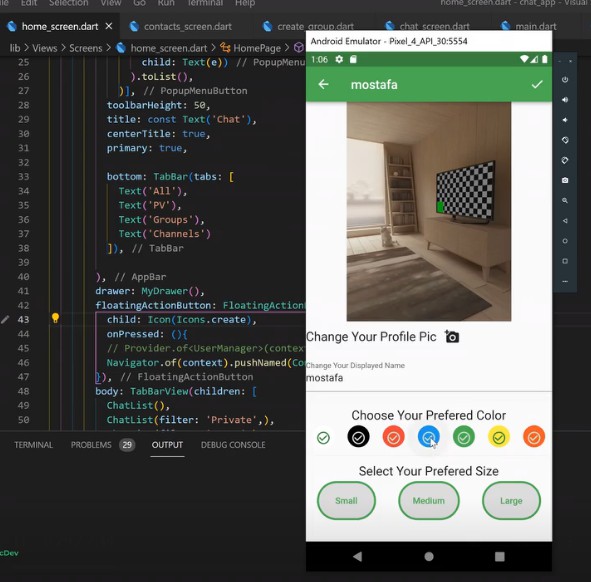

- This is a sandbox video call application built with Flutter and WebRTC. You can call from browser to browser, phone to phone, browser to phone, and phone to browser.

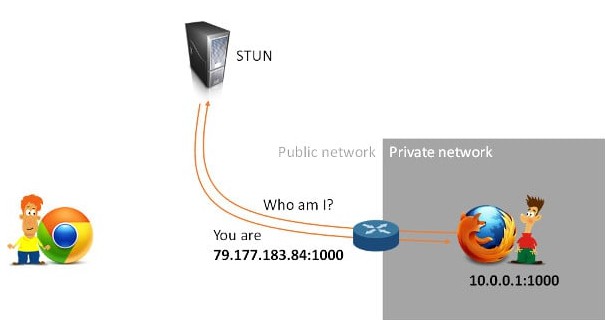

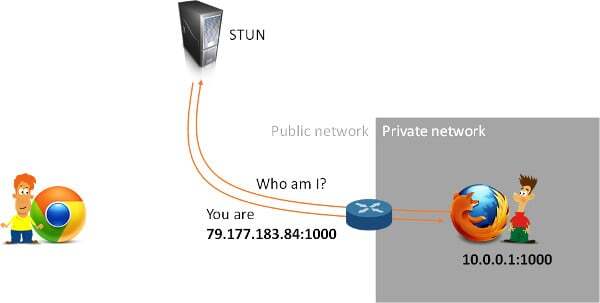

How does it work?

- Client 1 and Client 2 each create a peer connection configured with a STUN server (STUN server URL: stun:stun.l.google.com:19302). The STUN server is only used to discover each client's public address for its ICE candidates; the offer and answer are generated locally by each peer connection.

- Client 1 asks its peer connection to create an offer.

- The peer connection returns the SDP text with type “offer” to Client 1.

- Client 1 copies the SDP text and type and sends them to Client 2. **Client 2** then sets its peer connection's remote description to the SDP of Client 1.

- Client 2 creates an answer for Client 1.

- Client 2's peer connection returns the SDP text, the type (“answer”), and a candidate string.

- Client 2 copies these values and sends them to Client 1.

- Client 1 sets its peer connection's remote description to the SDP of Client 2 and adds the candidate of Client 2.

- Client 1 and Client 2 are now connected.
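The copy-and-send steps above need some text format for the SDP, its type, and the candidates. The following is a minimal sketch of one possible format, not the app's actual code: the `SignalPayload` envelope and the `encodePayload`/`decodePayload` helpers are hypothetical names, and the two interfaces only mirror the shape of the browser's `RTCSessionDescriptionInit` and `RTCIceCandidateInit` so the sketch runs without a WebRTC runtime.

```typescript
// Shapes mirroring the browser's RTCSessionDescriptionInit and
// RTCIceCandidateInit, redeclared so the sketch needs no DOM typings.
interface SessionDescription {
  type: "offer" | "answer";
  sdp: string;
}

interface IceCandidate {
  candidate: string;
  sdpMid: string | null;
  sdpMLineIndex: number | null;
}

// Hypothetical envelope for what one client copies and sends to the other.
interface SignalPayload {
  description: SessionDescription;
  candidates: IceCandidate[];
}

// ICE configuration using the STUN URL named in the steps above.
const rtcConfig = {
  iceServers: [{ urls: "stun:stun.l.google.com:19302" }],
};

// Serialize the payload into a single string that can be copied and pasted.
function encodePayload(payload: SignalPayload): string {
  return JSON.stringify(payload);
}

// Parse a pasted string and reject it if the description is missing or malformed.
function decodePayload(text: string): SignalPayload {
  const data = JSON.parse(text) as {
    description?: { type?: string; sdp?: string };
    candidates?: IceCandidate[];
  };
  const type = data.description?.type;
  const sdp = data.description?.sdp;
  if (typeof sdp !== "string") {
    throw new Error("payload has no SDP text");
  }
  if (type === "offer" || type === "answer") {
    return { description: { type, sdp }, candidates: data.candidates ?? [] };
  }
  throw new Error("payload type must be 'offer' or 'answer'");
}
```

In a browser, the `description` would come from `RTCPeerConnection.createOffer()` or `createAnswer()`, and the receiving side would pass it to `setRemoteDescription()` and each candidate to `addIceCandidate()`. Validating on decode helps catch a truncated paste before it reaches the peer connection.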

Are multiple peers possible?

- I found 3 ways to do it:

Option 1: Mesh Model

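- This works like basic WebRTC P2P: every peer connects directly to every other peer. With 6 or more users, performance becomes very bad, because each peer has to upload and download a separate stream for every other participant.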
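To see why the mesh model degrades as the call grows, here is a small sketch that counts connections for a full mesh. It assumes every peer sends one copy of its media to each other peer; `meshLoad` and `MeshLoad` are illustrative names, not part of the app.

```typescript
// Connection and stream counts for a full-mesh call.
interface MeshLoad {
  totalConnections: number; // distinct peer-to-peer links in the whole call
  uploadsPerPeer: number;   // copies of its own media each peer sends
  downloadsPerPeer: number; // remote streams each peer receives
}

function meshLoad(participants: number): MeshLoad {
  if (!Number.isInteger(participants) || participants < 1) {
    throw new RangeError("participants must be a positive integer");
  }
  const others = participants - 1;
  return {
    // Each pair of peers shares one connection: n * (n - 1) / 2.
    totalConnections: (participants * others) / 2,
    uploadsPerPeer: others,
    downloadsPerPeer: others,
  };
}

for (const n of [2, 4, 6]) {
  const load = meshLoad(n);
  console.log(
    `${n} peers: ${load.totalConnections} connections, ` +
      `${load.uploadsPerPeer} up / ${load.downloadsPerPeer} down per peer`
  );
}
```

With 6 peers this gives 15 connections in total, and each device encodes and uploads its media 5 times while decoding 5 incoming streams.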

Option 2: MCUs – Multipoint Control Units

- MCUs are also referred to as Multipoint Conferencing Units. Whichever way you spell it out, the basic functionality is shown in the following diagram.

- Each peer in the group call establishes a connection with the MCU server to send up its video and audio. The MCU, in turn, makes a composite video and audio stream containing all of the video/audio from each of the peers, and sends that back to everyone.

- Regardless of the number of participants in the call, the MCU makes sure that each participant gets only one set of video and audio. This means the participants’ computers don’t have to do nearly as much work. The tradeoff is that the MCU is now doing that same work. So, as your calls and applications grow, you will need bigger servers in an MCU-based architecture than an SFU-based architecture. But, your participants can access the streams reliably and you won’t bog down their devices.

- Media servers that implement MCU architectures include Kurento (which Twilio Video is based on), Frozen Mountain, and FreeSWITCH.
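The "composite" an MCU produces means that the server decides where each participant's video sits in one shared frame. As a rough illustration only, the sketch below computes a near-square grid of tiles for a composite frame. The `compositeLayout` function, the `Tile` type, and the grid rule are assumptions made for this example; a real MCU also decodes, mixes, and re-encodes the media, which is not shown.

```typescript
// Position and size of one participant's video inside the composite frame.
interface Tile {
  participant: number; // zero-based participant index
  x: number;
  y: number;
  width: number;
  height: number;
}

// Hypothetical layout rule: a near-square grid, filled row by row.
function compositeLayout(
  participants: number,
  frameWidth: number,
  frameHeight: number
): Tile[] {
  if (!Number.isInteger(participants) || participants < 1) {
    throw new RangeError("participants must be a positive integer");
  }
  const columns = Math.ceil(Math.sqrt(participants));
  const rows = Math.ceil(participants / columns);
  const width = Math.floor(frameWidth / columns);
  const height = Math.floor(frameHeight / rows);

  const tiles: Tile[] = [];
  for (let i = 0; i < participants; i++) {
    tiles.push({
      participant: i,
      x: (i % columns) * width,
      y: Math.floor(i / columns) * height,
      width,
      height,
    });
  }
  return tiles;
}

// Every participant receives this same single frame, whatever the group size.
console.log(compositeLayout(5, 1280, 720));
```

Because the layout is fixed on the server, all participants see the same arrangement, and the cost of building it stays on the MCU rather than on each device.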

Option 3: SFUs – Selective Forwarding Units

- In this case, each participant still sends just one set of video and audio up to the SFU, as with the MCU. However, the SFU doesn’t make any composite streams. Rather, it forwards a separate stream down for each of the other participants. For example, in a call with 5 people, each participant receives 4 streams.

- The good thing about this is that it’s still less work for each participant than a mesh peer-to-peer model. This is because each participant uploads their video/audio over only one connection (to the SFU) instead of sending it to every other participant. However, it can be more bandwidth intensive than the MCU, because each participant downloads multiple streams.

- The nice thing for participants about receiving separate streams is that they can do whatever they want with them. They are not bound to the layout or UI decisions of the MCU. If you have been in a conference call where the conferencing tool allowed you to choose a different layout (i.e., which speaker’s video is most prominent, or how the videos are arranged on the screen), then it was likely using an SFU.

- Media servers which implement an SFU architecture include Jitsi and Janus.
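The trade-offs between the three options come down to how many streams each participant sends and receives. The sketch below tabulates those counts under a simplifying assumption: one media stream per participant, with no simulcast or selective subscription. `streamsPerParticipant` and the `Topology` type are illustrative names only.

```typescript
type Topology = "mesh" | "mcu" | "sfu";

interface StreamCount {
  uploads: number;   // streams each participant sends
  downloads: number; // streams each participant receives
}

function streamsPerParticipant(
  topology: Topology,
  participants: number
): StreamCount {
  if (!Number.isInteger(participants) || participants < 2) {
    throw new RangeError("a call needs at least 2 participants");
  }
  const others = participants - 1;
  switch (topology) {
    case "mesh":
      // One direct connection to every other peer, in both directions.
      return { uploads: others, downloads: others };
    case "mcu":
      // One stream up, one composite stream down.
      return { uploads: 1, downloads: 1 };
    case "sfu":
      // One stream up, one forwarded stream per other participant down.
      return { uploads: 1, downloads: others };
  }
}

const topologies: Topology[] = ["mesh", "mcu", "sfu"];
for (const topology of topologies) {
  const { uploads, downloads } = streamsPerParticipant(topology, 5);
  console.log(`${topology}: ${uploads} up, ${downloads} down`);
}
```

For a 5-person call, the SFU row shows 1 upload and 4 downloads, the MCU row 1 and 1, and the mesh row 4 and 4, matching the comparison in the text.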