eneural_net

eNeural.net / Dart is an AI library for efficient Artificial Neural Networks. The library is portable (native, JS/Web, Flutter), and its computation can use SIMD (Single Instruction Multiple Data) to improve performance.

Usage

import 'package:eneural_net/eneural_net.dart';

import 'package:eneural_net/eneural_net_extensions.dart';

void main() {

// Type of scale to use to compute the ANN:

var scale = ScaleDouble.ZERO_TO_ONE;

// The samples to learn in Float32x4 data type:

var samples = SampleFloat32x4.toListFromString(

[

'0,0=0',

'1,0=1',

'0,1=1',

'1,1=0',

],

scale,

true, // Already normalized in the scale.

);

var samplesSet = SamplesSet(samples, subject: 'xor');

// The activation function to use in the ANN:

var activationFunction = ActivationFunctionSigmoid();

// The ANN using layers that can compute with Float32x4 (SIMD compatible type).

var ann = ANN(

scale,

// Input layer: 2 neurons with linear activation function:

LayerFloat32x4(2, true, ActivationFunctionLinear()),

// 1 Hidden layer: 3 neurons with sigmoid activation function:

[HiddenLayerConfig(3, true, activationFunction)],

// Output layer: 1 neuron with sigmoid activation function:

LayerFloat32x4(1, false, activationFunction),

);

print(ann);

// Training algorithm:

var backpropagation = Backpropagation(ann, samplesSet);

print(backpropagation);

print('\n---------------------------------------------------');

var chronometer = Chronometer('Backpropagation').start();

// Train the ANN using Backpropagation until global error 0.01,

// with a maximum of 50000 epochs per training session and

// up to 10 retries when a training session can't reach

// the target global error:

var achievedTargetError = backpropagation.trainUntilGlobalError(

targetGlobalError: 0.01, maxEpochs: 50000, maxRetries: 10);

chronometer.stop(operations: backpropagation.totalTrainingActivations);

print('---------------------------------------------------\n');

// Compute the current global error of the ANN:

var globalError = ann.computeSamplesGlobalError(samples);

print('Samples Outputs:');

for (var i = 0; i < samples.length; ++i) {

var sample = samples[i];

var input = sample.input;

var expected = sample.output;

// Activate the sample input:

ann.activate(input);

// The current output of the ANN (after activation):

var output = ann.output;

print('- $i> $input -> $output ($expected) > error: ${output - expected}');

}

print('\nglobalError: $globalError');

print('achievedTargetError: $achievedTargetError\n');

print(chronometer);

}

Output:

ANN<double, Float32x4, SignalFloat32x4, Scale<double>>{ layers: 2+ -> [3+] -> 1 ; ScaleDouble{0.0 .. 1.0} ; ActivationFunctionSigmoid }

Backpropagation<double, Float32x4, SignalFloat32x4, Scale<double>, SampleFloat32x4>{name: Backpropagation}

---------------------------------------------------

Backpropagation> [INFO] Started Backpropagation training session "xor". { samples: 4 ; targetGlobalError: 0.01 }

Backpropagation> [INFO] Selected initial ANN from poll of size 100, executing 600 epochs. Lowest error: 0.2451509315860858 (0.2479563313068569)

Backpropagation> [INFO] (OK) Reached target error in 2317 epochs (107 ms). Final error: 0.009992250436771877 <= 0.01

---------------------------------------------------

Samples Outputs:

- 0> [0, 0] -> [0.11514352262020111] ([0]) > error: [0.11514352262020111]

- 1> [1, 0] -> [0.9083549976348877] ([1]) > error: [-0.0916450023651123]

- 2> [0, 1] -> [0.9032943248748779] ([1]) > error: [-0.09670567512512207]

- 3> [1, 1] -> [0.09465821087360382] ([0]) > error: [0.09465821087360382]

globalError: 0.009992250436771877

achievedTargetError: true

Backpropagation{elapsedTime: 111 ms, hertz: 83495.49549549549 Hz, ops: 9268, startTime: 2021-05-26 06:25:34.825383, stopTime: 2021-05-26 06:25:34.936802}

SIMD (Single Instruction Multiple Data)

Dart supports SIMD when the computation uses the Float32x4 and Int32x4 types.

The Activation Functions are implemented using Float32x4, improving performance by 1.5x to 2x compared to a normal (non-SIMD) implementation.

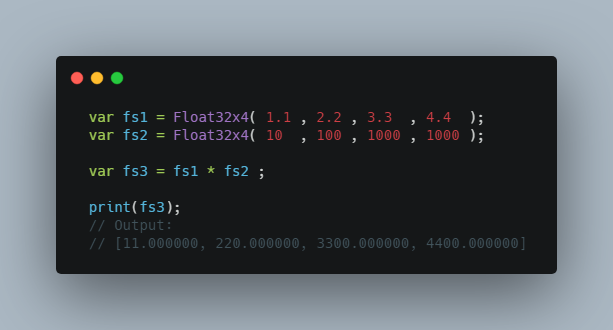

The basic principle of SIMD is to execute a math operation on 4 numbers simultaneously.

Float32x4 holds 4 lanes, each a 32-bit single-precision floating-point number (exposed in Dart as a double).

Example of multiplication:

var fs1 = Float32x4( 1.1 , 2.2 , 3.3 , 4.4 );

var fs2 = Float32x4( 10 , 100 , 1000 , 1000 );

var fs3 = fs1 * fs2 ;

print(fs3);

// Output:

// [11.000000, 220.000000, 3300.000000, 4400.000000]

See "dart:typed_data library" and "Using SIMD in Dart".
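The lane-wise principle described above extends naturally to whole lists. The following is a minimal sketch, using only dart:typed_data, of a dot product that multiplies and accumulates 4 values per step. The dotProduct function is a name chosen for this illustration; it is not part of the eNeural.net API.

```dart
import 'dart:typed_data';

/// Illustrative helper (not part of eneural_net): sums the lane-wise
/// products of [a] and [b]. Both lists must have the same length.
double dotProduct(Float32x4List a, Float32x4List b) {
  var acc = Float32x4.zero();
  for (var i = 0; i < a.length; ++i) {
    acc += a[i] * b[i]; // 4 multiplications and 4 additions per step.
  }
  // Collapse the 4 lanes into a single value.
  return acc.x + acc.y + acc.z + acc.w;
}

void main() {
  var a = Float32x4List.fromList([
    Float32x4(1, 2, 3, 4),
    Float32x4(5, 6, 7, 8),
  ]);
  var b = Float32x4List.fromList([
    Float32x4.splat(2),
    Float32x4.splat(2),
  ]);
  // 2 * (1 + 2 + ... + 8) = 72
  print(dotProduct(a, b));
}
```

Keeping the accumulator as a Float32x4 until the end of the loop means the lanes are only combined once, which is usually where the benefit of this layout comes from.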

Signal

The class Signal represents the collection of numbers (including its related operations) that flows through the ANN: the actual signal that an Artificial Neural Network computes.

The main implementation is SignalFloat32x4, an ANN Signal based on Float32x4. All of its operations prioritize the use of SIMD.

The Signal framework allows any kind of data to represent the numbers and operations of an eNeural.net ANN. SignalInt32x4 is an experimental implementation to exercise an ANN based on integers.
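To make the idea of a Float32x4-based signal more concrete, here is a rough sketch of how a list of numbers could be packed into Float32x4 entries so that operations run 4 values at a time. The helpers packFloat32x4 and unpackFloat32x4 are hypothetical names for this illustration only; they are not the SignalFloat32x4 implementation, whose internals are not described here.

```dart
import 'dart:typed_data';

/// Hypothetical helper (not part of eneural_net): packs [values] into
/// Float32x4 entries, padding the last entry with zeros.
Float32x4List packFloat32x4(List<double> values) {
  var entries = (values.length + 3) ~/ 4;
  // Float32List is zero-initialized, so unused lanes stay at 0.0.
  var flat = Float32List(entries * 4);
  flat.setAll(0, values);
  return Float32x4List.view(flat.buffer);
}

/// Hypothetical helper: reads back the first [length] values of [packed].
List<double> unpackFloat32x4(Float32x4List packed, int length) {
  var flat =
      packed.buffer.asFloat32List(packed.offsetInBytes, packed.length * 4);
  return flat.sublist(0, length);
}

void main() {
  // 5 values need 2 entries; the last 3 lanes are padding.
  var packed = packFloat32x4([0.5, 1.0, 1.5, 2.0, 2.5]);
  for (var i = 0; i < packed.length; ++i) {
    packed[i] = packed[i].scale(2); // Doubles 4 values per step.
  }
  // The original values doubled: 1, 2, 3, 4 and 5.
  print(unpackFloat32x4(packed, 5));
}
```

The padding lanes are the price of this layout: a signal whose length is not a multiple of 4 carries a few unused values, and any reduction over the lanes has to ignore them or rely on them being zero.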

Activation Functions

ActivationFunction is the base class for ANN neuron activation functions:

- ActivationFunctionSigmoid: The classic Sigmoid function (returns, for x, a value between 0.0 and 1.0):

  activation(double x) { return 1 / (1 + exp(-x)) ; }

- ActivationFunctionSigmoidFast: Fast approximation of the Sigmoid function that is not based on exp(x):

  activation(double x) { x *= 3 ; return 0.5 + ((x) / (2.5 + x.abs()) / 2) ; }

  Function author: Graciliano M. Passos: [gmpassos@GitHub][github].

- ActivationFunctionSigmoidBoundedFast: Fast approximation of the Sigmoid function that is not based on exp(x), bounded to a lower and upper limit for [x]:

  activation(double x) { if (x < lowerLimit) { return 0.0 ; } else if (x > upperLimit) { return 1.0 ; } x = x / scale ; return 0.5 + (x / (1 + (x * x))) ; }

  Function author: Graciliano M. Passos: [gmpassos@GitHub][github].
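The expressions given above for ActivationFunctionSigmoid and ActivationFunctionSigmoidFast can be compared directly as standalone functions. The sketch below copies those two formulas into plain Dart functions and adds a Float32x4 variant of the fast formula. The names sigmoid, sigmoidFast and sigmoidFastX4 are assumptions for this example and are not the library's classes; the Float32x4 variant is only one possible way to write the same expression with SIMD types.

```dart
import 'dart:math' as math;
import 'dart:typed_data';

/// The classic Sigmoid formula, based on exp.
double sigmoid(double x) => 1 / (1 + math.exp(-x));

/// The fast approximation formula: no call to exp.
double sigmoidFast(double x) {
  x *= 3;
  return 0.5 + ((x) / (2.5 + x.abs()) / 2);
}

/// The same fast formula applied to 4 values at once (illustrative).
Float32x4 sigmoidFastX4(Float32x4 x) {
  var x3 = x.scale(3);
  var half = Float32x4.splat(0.5);
  return half + (x3 / (Float32x4.splat(2.5) + x3.abs())) * half;
}

void main() {
  for (var x in [-4.0, -1.0, 0.0, 1.0, 4.0]) {
    print('x=$x sigmoid=${sigmoid(x)} fast=${sigmoidFast(x)}');
  }
  // 4 inputs computed in a single call:
  print(sigmoidFastX4(Float32x4(-4, -1, 1, 4)));
}
```

Both functions return exactly 0.5 at x = 0 and stay between 0.0 and 1.0, but they are not numerically identical elsewhere, so a network trained with one is not guaranteed to behave the same with the other.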

exp(x)

exp is the natural exponential function: e raised to the power x.

This is an important ANN function, since it is used by the popular Sigmoid function. A high-precision version is usually slow, and approximate versions can be used for most ANN models and training algorithms.
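As a rough illustration of the trade-off between precision and cost, the sketch below approximates exp(x) with the classic limit (1 + x/n)^n, using n = 1024 so that the power is computed with 10 squarings. This is a generic textbook technique chosen for the example; it is not claimed to be the approximation used by eNeural.net, and expApprox is a hypothetical name.

```dart
import 'dart:math' as math;

/// Approximates exp(x) as (1 + x / 1024)^1024 using 10 squarings.
/// Illustrative only: accuracy degrades as |x| grows.
double expApprox(double x) {
  var y = 1.0 + x / 1024;
  for (var i = 0; i < 10; ++i) {
    y *= y; // After k squarings, y holds (1 + x / 1024)^(2^k).
  }
  return y;
}

void main() {
  for (var x in [-1.0, 0.0, 0.5, 1.0]) {
    var exact = math.exp(x);
    var approx = expApprox(x);
    var relativeError = ((approx - exact) / exact).abs();
    print('x=$x exact=$exact approx=$approx relativeError=$relativeError');
  }
}
```

The relative error of this approximation grows roughly with x squared, so it is only reasonable for inputs near zero, which is one reason a bounded input range can matter for approximate activation functions.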

Fast Math

An internal Fast Math library is included and can be used on platforms that do not compute exp (the exponential function) efficiently.

You can import this library and use it to create a specialized ActivationFunction implementation, or use it in any kind of project:

import 'dart:typed_data';

import 'package:eneural_net/eneural_net_fast_math.dart' as fast_math;

void main() {

// Fast Exponential function:

var o = fast_math.exp(2);

// Fast Exponential function with high precision:

var highPrecision = <double>[0.0 , 0.0];

var oHighPrecision = fast_math.expHighPrecision(2, 0.0, highPrecision);

// Fast Exponential function with SIMD acceleration:

var o32x4 = fast_math.expFloat32x4( Float32x4(2,3,4,5) );

}

The implementation is based on the Dart package Complex.

The fast_math.expFloat32x4 function was created by Graciliano M. Passos ([gmpassos@GitHub][github]).